Two weeks ago I experienced IPv6-less life for a week thanks to my ISP, and this week the same ISP gave me a taste of what IPv6-only life looks like.

The start of the IPv6-only experience (a bit before 6 am)

I usually wake up crazily early in the morning (by nerd standards, at any rate) if it is light outside. In Finland in the summer it gets light quite early, so I must have woken up a bit before 6 am. I usually start working (or tinkering with some hobby project) even before breakfast, so I hopped on my computer.

That particular morning things did not go quite as smoothly as usual. While my Matrix server (and client) were happy enough, most of my things that depended on incoming connectivity (such as Matrix message delivery from outside the home) were not working, and my monitoring tooling looked like a Christmas tree with some green and quite a lot of red.

Most of the web stuff did not work particularly well either. For example, I could get to the Oracle Cloud login page, but the login to my home region did not complete (it just hung when contacting some hostname that looked suspiciously like an IPv4 address encoded in DNS).

After some head-scratching I noticed that ping did not work from my home either, and that basically IPv4 was not working at all. IPv6 was working partially, but as I had not published IPv6 addresses (AAAA records) for my home services in DNS, inbound connectivity was solely IPv4 (and not happening), which is why e.g. Matrix was not receiving anything new.
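A quick way to spot this kind of gap in advance is to list which hostnames resolve only to IPv4 addresses. The following is a minimal sketch, not what I actually ran: the function names and the example hostnames are made up for illustration, and it relies on whatever the system resolver returns (so `/etc/hosts` entries or DNS64 on the local network can skew the result).

```python
import socket
from typing import Callable, Dict, Iterable, List


def address_families(host: str, resolve: Callable = socket.getaddrinfo) -> Dict[str, List[str]]:
    """Return the IPv4 and IPv6 addresses that `host` resolves to.

    `resolve` is injectable so the logic can be exercised without a network.
    """
    found = {"ipv4": set(), "ipv6": set()}
    try:
        infos = resolve(host, None, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        infos = []  # name does not resolve at all
    for family, _type, _proto, _canon, sockaddr in infos:
        if family == socket.AF_INET:
            found["ipv4"].add(sockaddr[0])
        elif family == socket.AF_INET6:
            found["ipv6"].add(sockaddr[0])
    return {name: sorted(addrs) for name, addrs in found.items()}


def ipv4_only(hosts: Iterable[str], resolve: Callable = socket.getaddrinfo) -> List[str]:
    """Return the hosts that have at least one IPv4 address but no IPv6 address."""
    result = []
    for host in hosts:
        families = address_families(host, resolve)
        if families["ipv4"] and not families["ipv6"]:
            result.append(host)
    return result
```

Calling `ipv4_only(["matrix.example.org", "www.example.org"])` with your own service names would return the ones that an IPv6-only client cannot reach by name.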

What confounded me at the time was that I also tried my cellular fallback connectivity, and the same thing happened: IPv4 simply did not work. Perhaps I had slept through the announcement that it had been retired? Were both of my laptops broken?

‘Infrastructure is undergoing maintenance’

Around 6 am I got around to looking at my ISP's web page (kudos to them, it worked over IPv6), and they noted explicitly that they had a 2 am to 6 am slot for infrastructure maintenance in my neighbourhood. Unfortunately I have cellular connectivity from the same provider, and that seemed to be affected too. And it was already 6 am.

At this point I was getting somewhat annoyed. It was already past 6 am, and I couldn’t do most of the things I actually wanted to do in the morning, due to either my own misconfiguration or the world not being ready for IPv6-only me yet (e.g. the web server of our startup did not, and still does not, have IPv6 configured because nobody has wanted it; the same goes for the Kubernetes load balancer).

I have IPv6, I can tunnel IPv4 using it.. or can I?

Luckily I was not without resources. I have a WireGuard VPN configured on a VPS in Oracle Cloud, just for cases where I am on a network that does not support IPv6 (the irony). I attempted to use it, but sadly, the configuration did not work.

I could log in via SSH (IPv6), but WireGuard did not work. I looked at my Terraform configuration for the Oracle node and noticed almost immediately that I had whitelisted the WireGuard port only for IPv4, and not IPv6, in the Oracle security list for the network the VPS lives in. Unfortunately, this was something I could not change: without IPv4 connectivity I could not log in to the Oracle console, and without that I could not apply a new configuration. So scratch that idea.
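This kind of mismatch is easy to check for mechanically once the ingress rules are available as data. Below is a small sketch of the idea; the rule format (dicts with `source`, `protocol` and `port`) is a simplification I made up for illustration and not Oracle's or Terraform's actual schema, and UDP port 51820 is just the conventional WireGuard port, used here as an assumption.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, List, Tuple


def ipv4_only_ports(rules: List[Dict]) -> List[Tuple[str, int]]:
    """Return (protocol, port) pairs allowed from IPv4 sources but from no IPv6 source."""
    versions = defaultdict(set)
    for rule in rules:
        # ip_network() tells us whether the source CIDR is IPv4 or IPv6
        version = ipaddress.ip_network(rule["source"], strict=False).version
        versions[(rule["protocol"], rule["port"])].add(version)
    return sorted(key for key, seen in versions.items() if seen == {4})


# Hypothetical rule set mirroring the situation described above:
# SSH is open for both families, the VPN port only for IPv4.
example_rules = [
    {"source": "0.0.0.0/0", "protocol": "tcp", "port": 22},
    {"source": "::/0", "protocol": "tcp", "port": 22},
    {"source": "0.0.0.0/0", "protocol": "udp", "port": 51820},
]
```

With these example rules, `ipv4_only_ports(example_rules)` flags only the UDP VPN port, which is exactly the gap that bit me here.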

Then I attempted to use a SOCKS5 tunnel over SSH, but for some reason that did not work very well with Safari on macOS (I am still not quite sure why; the SOCKS5 setup in it is a bit wonky, and I usually use Firefox for this, but I did not have it installed).

At this point, I had been trying to get something to work for almost an hour, and I was feeling quite frustrated.

The happy ending (7 am)

I was also starting to get worried, as I do not live alone, and there is quite a strict SLA about ‘internet just works’ in our household, since we mostly work from home. The maintenance break was supposed to have ended an hour ago, and no, the ISP had not posted an extension of it.

So in the classic ‘have you restarted it?’ troubleshooting mode, I literally just restarted the modem in frustration (it is in bridging mode and should just be passing everything back and forth).

After a minute of tense waiting (this stuff does not start fast), uptime-kuma was happy, and so was I. Crisis averted, and network connectivity was fine once more.

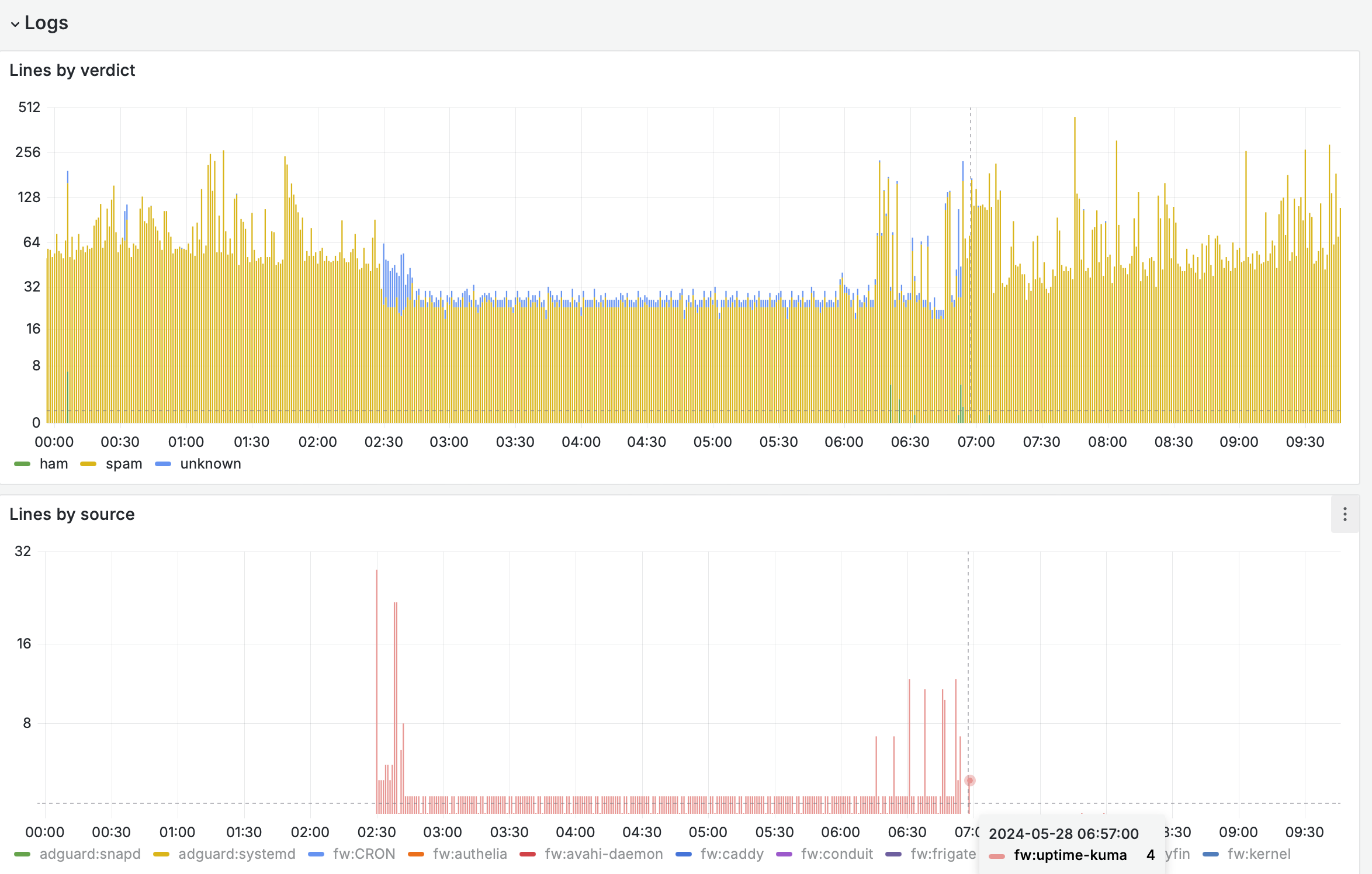

This is what the log monitoring looks like for the period (note the logarithmic scale; there weren’t particularly many log lines in general, and for some lines I did not have rules, presumably the ones with errors):

My Netflow data has no IPv4 traffic between 02:30 and 07:01. Unfortunately I still do not have a good visualisation for it, but I am working on it.

I think I learned three valuable lessons from this exercise:

- Test that fallback connectivity actually works (in this case, the WireGuard server over IPv6)

  - I fixed my Terraform rules, and now I can also fire off WireGuard tunnels to my jumpbox over IPv6

- Do not use the same ISP for main and fallback connectivity

  - I knew this from the start, but the combo deals they offer are so tempting, and this is the first parallel downtime in over 4 years of being a customer, so I am not sure it is worth the hassle of switching

- Ensuring that work is possible over IPv6 might actually be worth checking at some point 😃
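For TCP services, the first and last lessons can be turned into a small check that forces a connection over one address family at a time. This is only a sketch under my own assumptions (the function names are invented, and the host and port in the usage note are placeholders). It also cannot verify WireGuard itself, since that runs over UDP and needs a real handshake to confirm.

```python
import socket
from typing import Dict


def reachable(host: str, port: int, family: int, timeout: float = 3.0) -> bool:
    """Try a TCP connection to host:port using only the given address family."""
    try:
        infos = socket.getaddrinfo(host, port, family=family, type=socket.SOCK_STREAM)
    except socket.gaierror:
        return False  # the name has no address of this family
    for fam, socktype, proto, _canon, sockaddr in infos:
        try:
            with socket.socket(fam, socktype, proto) as sock:
                sock.settimeout(timeout)
                sock.connect(sockaddr)
                return True
        except OSError:
            continue  # refused, timed out, or no route; try the next address
    return False


def reachability_report(host: str, port: int, timeout: float = 3.0) -> Dict[str, bool]:
    """Check the same service separately over IPv4 and IPv6."""
    return {
        "ipv4": reachable(host, port, socket.AF_INET, timeout),
        "ipv6": reachable(host, port, socket.AF_INET6, timeout),
    }
```

Running something like `reachability_report("jumpbox.example.org", 22)` from each uplink, main and fallback, would show at a glance which combinations actually work before the next maintenance window finds out for you.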